Ushering in a ‘Smart’ Future Responsibly

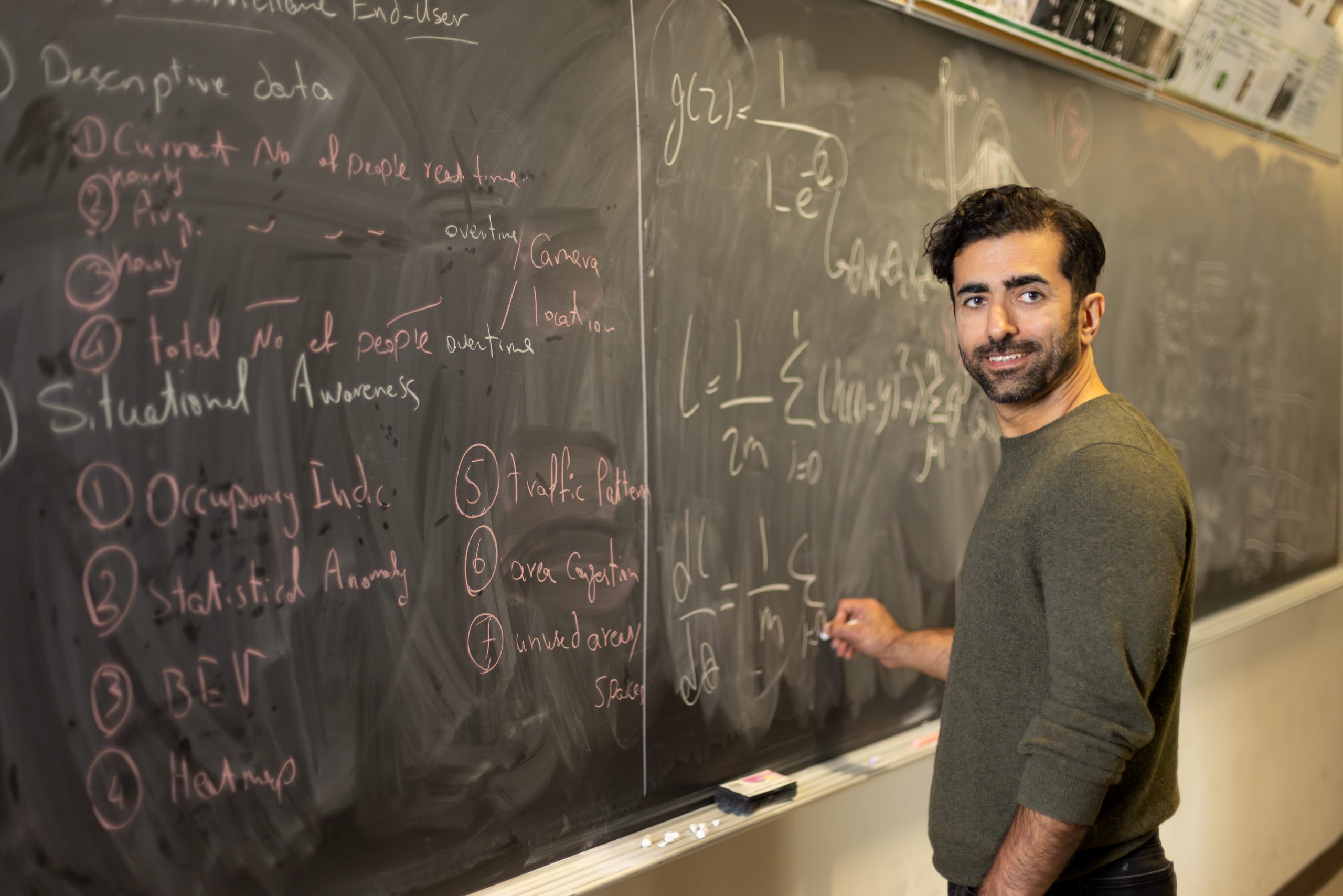

Researcher Hamed Tabkhi discovers the key to using AI for public safety: involve the community in the design

By Yen Duong

In Hollywood, powerful evil artificial intelligences take over governments, destroy planets and predict our every move. In real life, the problems with AI are often those of their creators — perpetuating and worsening gender and racial biases. To fight these biases and restore public trust in AI, researchers are turning to the public.

“The question is how to create artificial intelligence responsibly and in an ethical manner to address real-world problems,” said Hamed Tabkhi, who is working with community stakeholders and researchers to “co-create and co-design” solutions to public safety with AI. “Public opinion is a priority for our work: what are people’s needs, what are their privacy concerns, what kind of system do we need to design?”

In Tabkhi’s (literally) million-dollar idea, his AI system helps law enforcement without breaking the trust of people the police are meant to protect. Residents’ crime and policing concerns directly inform the design of an AI system to address neighborhood safety specific to their community, and prevent misunderstanding about law enforcement’s use of the tools.

“We are considering how to use responsible AI to better detect criminal activities or anything that will be dangerous to the public but at the same time, respect the privacy of citizens.”

–Hamed Tabkhi

“We don’t want to inadvertently over-police the neighborhoods we intend to support,” said Tabkhi, who is an associate professor of electrical and computer engineering in the William States Lee College of Engineering at UNC Charlotte. “What we have yet to solve is: What is the right balance? How can we use a responsible AI to better detect criminal activities or anything that will be dangerous to the public but at the same time, respect the privacy of citizens?”

SETTING UP THE NETWORK

In 2016, Tabkhi joined UNC Charlotte’s faculty and created his lab, moving on from his thesis research to computer vision and how computers process images. He immediately established networking prowess by putting together a National Science Foundation proposal with four collaborators from the criminal justice and criminology, electrical and computer engineering, and civil and environmental engineering departments and the Center for Applied Geographic Information Science. The team received $2 million for the inaugural year of the NSF Smart and Connected Communities program.

“I was excited about this program because, in contrast with the traditional way of doing engineering where you’re isolated in your lab, build a technology and hope for the best, the aim is to use technology and AI to address actual problems and social issues,” Tabkhi said. “This program pushes us to explore those areas with real pilots and working systems.”

From the very beginning, the team partnered with the city of Charlotte Office of Sustainability to connect with community members. They started with visits to farmers markets, public libraries and community centers to speak with stakeholders and ask them about their public safety concerns — activities that they continue today.

The team also met with leaders from the Charlotte-Mecklenburg Police Department and other area businesses and hospitals. After organizing a summit in 2019 to discuss AI and public safety with various stakeholders, they decided to make a working pilot in the community to get better feedback. They partnered with Central Piedmont Community College, particularly with its criminal justice department chair Jeri Guido ’07.

“This partnership is a great example of how research universities can partner with community colleges to co-design and co-create a technology directly for the communities and stakeholders in the general public.”

–Hamed Tabkhi

HOW THE AI WORKS

The COVID-19 pandemic slowed research across the country, but Tabkhi’s team took advantage of the relative quiet at CPCC and commandeered a room to create an AI lab there. Now, their AI watches 40 CCTV security cameras across campus, strips out identifying information and pings notifications about any behavior it deems anomalous.

Figuring out anomalous behavior is the trickiest part of creating the team’s AI. They painstakingly annotated hundreds of hours of security camera video from a nearby parking lot and uploaded the data set so other research teams could use it to train AI. They also created demonstrations of anomalous behavior (e.g., fighting, stealing) with actors to show their AI.

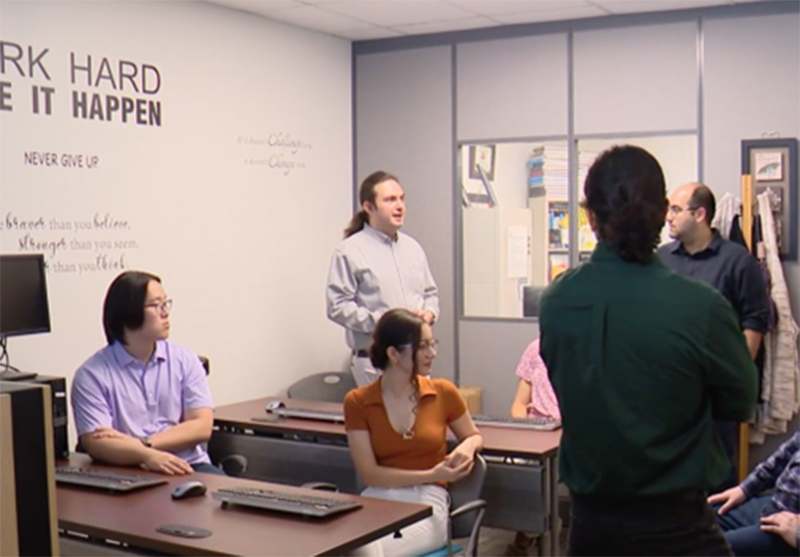

Tabkhi and his research team at Central Piedmont Community College

“It’s very difficult to generalize anomaly detection because something that might be an anomaly in a hospital isn’t necessarily an anomaly in a shopping mall or a parking lot,” Tabkhi pointed out.

Ultimately, the AI itself will be able to identify anomalies without needing examples. That’s the “intelligence” in artificial intelligence; by watching far more hours of normal behavior than any one human would, it will learn what abnormal behavior looks like. The more unusual part of this project revolves around the community’s concerns.
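The idea of learning what is normal and flagging what deviates from it can be sketched in a few lines. This is only an illustration, not the team's method: it assumes a single hypothetical feature (walking speed in meters per second) and a simple standard-deviation threshold, whereas a real system would work from far richer video-derived signals.

```python
from statistics import mean, stdev


def fit_normal_profile(normal_values):
    """Summarize 'normal' behavior as a mean and a sample standard deviation."""
    return mean(normal_values), stdev(normal_values)


def is_anomalous(value, profile, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the mean."""
    mu, sigma = profile
    if sigma == 0:
        # Every training value was identical, so any difference is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical walking speeds (m/s) observed during ordinary activity.
normal_speeds = [1.2, 1.4, 1.3, 1.1, 1.5, 1.3, 1.2, 1.4]
profile = fit_normal_profile(normal_speeds)

print(is_anomalous(1.35, profile))  # an ordinary pace
print(is_anomalous(4.0, profile))   # a sudden sprint
```

Note that the profile is built only from ordinary observations; no example of a fight or theft is needed to decide that a value is unusual. What counts as ordinary still depends on where the data came from, which is why a profile fitted in a parking lot would not transfer directly to a hospital.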

“What is unique about our work is that we are doing it by removing identifiable information — without using faces, skin color or gender,” Tabkhi said. “We’re doing this to remove biases, assuring fairness in the AI process and its outcomes.”

At their community visits, the team demonstrates what the AI is observing. Any identifying information is cut out as people’s images are transformed into pixelated avatars. That’s how the AI watches people’s behavior rather than their appearance. The AI also doesn’t choose what to do; it only pings whoever would usually be watching the CCTVs when it detects something that is not normal.
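The article does not describe how the avatars are produced, so the following is only a generic illustration of pixelation, assuming a grayscale image stored as a grid of brightness values. The function name `pixelate` and the sample grid are hypothetical.

```python
def pixelate(image, block):
    """Replace each block-by-block tile with its average brightness."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [image[r][c] for r in rows for c in cols]
            avg = sum(tile) // len(tile)  # integer average of the tile
            for r in rows:
                for c in cols:
                    out[r][c] = avg
    return out


# Hypothetical 4x4 grayscale patch (0 = black, 255 = white).
patch = [
    [0, 10, 100, 110],
    [20, 30, 120, 130],
    [200, 210, 50, 60],
    [220, 230, 70, 80],
]
blurred = pixelate(patch, 2)
```

Averaging over tiles discards fine detail such as facial features while keeping the coarse shape and position of a figure, which is the property the paragraph above relies on.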

“At the end of the day, AI will not be the decision maker; it’s just another channel of information,” Tabkhi said. “We as humans cannot observe all the cameras at the same time. At the same time, we don’t want to leave all the decision making to the AI, so that’s why we are finding the right balance.”

LOOKING AHEAD

Last year, after the team reported its successes to NSF funders, the project was “highlighted as a success story” at a conference in Washington, D.C. With Guido and CPCC added to the grant as community partners, they received an additional $400,000 from the NSF to expand the pilot program as well as $550,000 to support commercialization.

Since then, they’ve continued visiting the community, developing the anomaly detection capabilities of their AI and creating more relationships with other entities to figure out how they can integrate the technology into existing security infrastructures.

In a separate project that applies AI to an existing problem, Tabkhi and collaborator Mona Azarbayjani at the David R. Ravin School of Architecture at UNC Charlotte are in the early stages of working on a “smart bus” system with the Charlotte Area Transit System. As an alternative to the traditional system, in which riders wait at stops for buses that follow fixed schedules and fixed routes, this team is developing an app that will create a more “Uber-like” bus experience.

“Building hard transit infrastructure like light rail takes many years and is very costly — we have existing bus infrastructure, why not use it smarter?” Tabkhi said excitedly. “CATS is doing a great job; they are already adding significant data analytics and data mining into the system and embracing technology.”

Riders would enter their information into the app (e.g., the time they need to be at work, the grocery store, daycare or home). The app then would direct them to the correct station and bus, and instruct buses to fill demands rather than stick to a fixed route.

Tabkhi projects that by adapting away from the traditional fixed routes and bus schedules, CATS will increase bus ridership by almost one-third and cut average travel times in half. Ultimately, that will mean fewer cars on the road and lower levels of carbon emissions.

Besides research and collaborations, Tabkhi also focuses on workforce development and education. He has mentored over two dozen graduate students and many undergraduates, including several who later joined the graduate electrical and computer engineering program. Outside the lab, Tabkhi helped establish a new machine learning concentration for undergraduates at UNC Charlotte. In the first year, 30 students registered; last year there were 50, and 70-plus are enrolled this fall.

“We truly believe that with the current acceleration of AI, you really won’t need a graduate degree or a Ph.D. to work in AI,” Tabkhi said, citing UNC Charlotte undergraduate alumni working at Bank of America who are using AI expertise to detect and prevent fraud. “With the proper education, and knowing the principles and how to use AI, students from all levels will be prepared for very competitive careers in the tech industry.”
